In the quest to build more intelligent and efficient artificial intelligence systems, researchers are finding inspiration in an unexpected place – the tiny brains of insects. Despite having nervous systems made up of a million neurons or fewer (compared with roughly 86 billion in the human brain), insects display remarkable capabilities in navigation, pattern recognition, and decision-making that put our most advanced AI to shame in terms of efficiency and adaptability. This emerging field, sometimes called computational entomology, sits at a fascinating intersection of biology and computer science and could change how we approach machine learning and robotics. By studying how insects achieve complex behaviors with minimal neural hardware, scientists are uncovering principles that may help overcome current limitations in artificial intelligence and lead to smarter, more efficient machines.

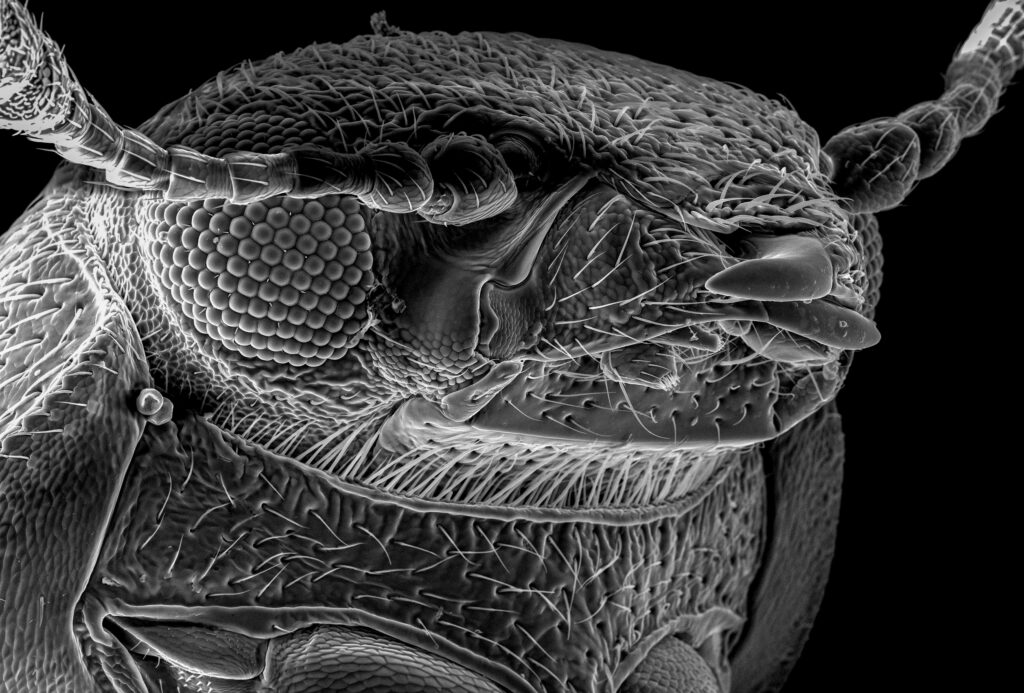

The Remarkable Efficiency of Insect Brains

Insect brains operate with astonishing efficiency, accomplishing complex tasks with minimal neural resources. A honeybee brain contains roughly one million neurons, yet these insects can forage several miles from their hive, remember complex floral patterns, communicate through sophisticated waggle dances, and make collective decisions. Fruit flies, whose brains hold on the order of 100,000 neurons, can execute precise flight maneuvers, learn from experience, and perform complex courtship behaviors. This neural efficiency stands in stark contrast to modern AI systems like GPT-4, which rely on billions of parameters and consume large amounts of energy. The comparison is loose, since a parameter is closer to a synapse than to a neuron, but the gap still highlights a fundamental point: evolution has shaped insect brains to deliver a great deal of functionality from minimal resources, a principle that AI researchers are increasingly eager to understand and replicate.

Navigation Without GPS: Insect Spatial Intelligence

Insects demonstrate navigational abilities that rival or exceed those of our most sophisticated robots. Desert ants of the genus Cataglyphis can wander hundreds of meters from their nest on meandering foraging runs, then head home in a nearly straight line without following pheromone trails or relying on GPS. Monarch butterflies migrate thousands of miles to the same overwintering sites, in a round trip that spans several generations. Researchers have discovered that many insects combine celestial cues, polarized light patterns, magnetic fields, and visual landmarks, integrated through specialized neural circuits. Unlike traditional robotic navigation systems that require detailed maps and constant sensor updates, insects rely on elegant computational shortcuts and minimal sensory input. This efficiency has inspired biomimetic navigation algorithms for drones and robots that can operate without GPS, including path-integration schemes that mimic how Cataglyphis ants track their position relative to home using simple vector calculations rather than complex mapping.
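The vector bookkeeping behind path integration can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not a model of the ant’s neural circuitry: each leg of the outbound trip is given as a (heading, distance) pair measured perfectly, whereas a real ant estimates heading from its sky compass and distance from its own movement, both with noise. The function name `path_integrate` is hypothetical.

```python
import math


def path_integrate(legs):
    """Return (home_heading, home_distance) after an outbound trip.

    `legs` is a list of (heading_in_radians, distance) pairs. Assumption for
    illustration: headings and distances are measured without error, unlike
    the noisy compass and odometry estimates a real ant relies on.
    """
    x = y = 0.0
    for heading, distance in legs:
        # Accumulate the net displacement from the nest, one leg at a time.
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
    # The home vector is the accumulated displacement reversed.
    home_distance = math.hypot(x, y)
    home_heading = math.atan2(-y, -x)
    return home_heading, home_distance
```

For an outbound trip of 3 units east and then 4 units north, `path_integrate([(0.0, 3.0), (math.pi / 2, 4.0)])` returns a home distance of 5.0 and a heading of about -2.21 radians, pointing back toward the southwest. Because a running sum is all that needs to be stored, memory stays constant no matter how winding the outbound path was.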

Pattern Recognition with Minimal Hardware

Insects excel at recognizing relevant patterns despite their limited neural resources, offering valuable insights for machine learning. Honeybees can identify complex visual patterns, distinguish human faces, and even learn abstract concepts like “sameness” and “difference,” all with fewer than a million neurons. Researchers at the University of Sheffield found that bees accomplish this through specialized circuits that extract only the most essential features from visual inputs, drastically reducing computational requirements. This contrasts sharply with modern deep learning systems, which typically require millions of training examples and billions of parameters to perform comparable recognition tasks. Studies of dragonfly vision have been particularly influential, revealing how these insects track prey with near-perfect accuracy using just 16 specialized neurons that predict prey movement. This work has inspired computer vision algorithms for autonomous vehicles that aim to maintain performance while using significantly less processing power.

Sparse Coding: Nature’s Data Compression

Insect brains employ a neural strategy called “sparse coding” that maximizes efficiency and has profound implications for AI design. In sparse coding, only a small fraction of neurons activate in response to any given stimulus, producing a representation that is both information-rich and energy-efficient. Researchers at the Janelia Research Campus found that in fruit flies, only around 5% of the Kenyon cells in the mushroom bodies (the brain’s learning centers) fire in response to any particular odor, yet this sparse representation carries enough information for the fly to recognize the odor and respond appropriately. By contrast, a conventional dense neural network computes every unit for every input, which consumes considerable energy. Sparse, event-driven activity is also a core idea behind “neuromorphic computing,” which builds chips that emulate the efficiency of biological neurons. IBM’s TrueNorth and Intel’s Loihi neuromorphic chips have demonstrated energy-efficiency gains of up to roughly 1,000 times over conventional processors on certain sparse, event-driven workloads.
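A common way to model this kind of sparse code is a random expansion followed by a winners-take-all step. The sketch below is a simplified illustration, not the fly circuit itself: each of many Kenyon-cell-like units (Kenyon cells are the mushroom body’s intrinsic neurons) sums a few randomly chosen inputs, and only the 5% most strongly driven units are allowed to fire, standing in for the inhibitory feedback that keeps real mushroom-body activity sparse. The layer sizes, fan-in, and function names are illustrative assumptions.

```python
import random


def make_expansion(n_inputs, n_cells, fan_in, seed=0):
    """Sparse random wiring: each Kenyon-cell-like unit samples `fan_in` inputs.

    Sizes and fan-in are illustrative assumptions, not measured fly values."""
    rng = random.Random(seed)
    return [rng.sample(range(n_inputs), fan_in) for _ in range(n_cells)]


def sparse_code(x, connections, active_fraction=0.05):
    """Binary code in which only the most strongly driven units fire."""
    drive = [sum(x[i] for i in conn) for conn in connections]
    k = max(1, int(len(drive) * active_fraction))
    # k-winners-take-all: a stand-in for the inhibitory feedback that keeps
    # mushroom-body activity sparse in the real brain.
    winners = sorted(range(len(drive)), key=drive.__getitem__, reverse=True)[:k]
    code = [0] * len(drive)
    for j in winners:
        code[j] = 1
    return code
```

Calling `sparse_code(odor, make_expansion(50, 2000, 6))` on any 50-value odor vector yields a 2,000-unit binary code with exactly 100 active units, so downstream circuits only ever have to read a small, fixed fraction of the population.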

Swarm Intelligence: Collective Problem-Solving

Social insects demonstrate how relatively simple individual agents can produce remarkably intelligent collective behaviors, providing powerful models for distributed AI systems. Ant colonies solve complex optimization problems when foraging, often converging on the shortest available path to a food source through stigmergy, a process in which individuals modify their environment (by depositing pheromones) to influence one another’s behavior without direct communication. Honeybee swarms reach democratic decisions when selecting a new nest site through a sophisticated consensus-building process involving scout bees and waggle dances. These natural algorithms have inspired numerous AI applications, including Ant Colony Optimization algorithms used to route telecommunications networks, schedule factory operations, and optimize supply chains. Similarly, Particle Swarm Optimization, originally inspired by bird flocking and fish schooling, lets many simple computational agents search complex solution spaces efficiently and can outperform traditional optimization methods in some dynamic environments.
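To make stigmergy concrete, here is a minimal Ant Colony Optimization loop in Python, simplified from the classic formulation so that it runs on a small directed graph rather than a full routing or scheduling problem. Each simulated ant picks its next edge with probability weighted by pheromone and by edge length; pheromone then evaporates and is re-deposited in proportion to how short each ant’s route was. The graph, parameter values, and function name are illustrative assumptions.

```python
import random


def ant_colony_shortest_path(graph, start, goal, n_ants=20, n_iters=30,
                             alpha=1.0, beta=2.0, evaporation=0.5, q=1.0, seed=0):
    """Minimal ACO on a directed acyclic graph.

    `graph` maps node -> {neighbor: edge_length}. Parameter values are
    illustrative defaults, not tuned settings from any particular study.
    """
    rng = random.Random(seed)
    pheromone = {(u, v): 1.0 for u in graph for v in graph[u]}
    best_path, best_len = None, float("inf")

    for _ in range(n_iters):
        tours = []
        for _ in range(n_ants):
            node, path, length = start, [start], 0.0
            while node != goal:
                neighbors = list(graph[node])
                # Attractiveness = pheromone (shared memory) x heuristic (1 / length).
                weights = [pheromone[(node, v)] ** alpha * (1.0 / graph[node][v]) ** beta
                           for v in neighbors]
                nxt = rng.choices(neighbors, weights=weights)[0]
                length += graph[node][nxt]
                path.append(nxt)
                node = nxt
            tours.append((path, length))
            if length < best_len:
                best_path, best_len = path, length
        # Evaporation lets the colony forget poor early choices.
        for edge in pheromone:
            pheromone[edge] *= 1.0 - evaporation
        # Each ant marks its route; shorter routes receive more pheromone.
        for path, length in tours:
            for u, v in zip(path, path[1:]):
                pheromone[(u, v)] += q / length
    return best_path, best_len, pheromone
```

On a toy graph where A→B→D has length 2, A→C→D has length 4, and the direct edge A→D has length 5, the colony settles on `['A', 'B', 'D']`, and the pheromone on the A→B edge ends up higher than on the A→C edge. Evaporation matters here: without it, early random choices would keep being reinforced indefinitely.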

Neural Shortcuts: Elegant Simplicity in Decision-Making

Insects have evolved elegant neural shortcuts that bypass complex computations, offering valuable lessons for streamlining AI. Dragonflies intercept flying prey with over 95% success, guided by a target-tracking system built around just 16 specialized neurons and an interception strategy closely related to proportional navigation, in which the pursuer turns in proportion to how fast the line of sight to its target rotates. Missile guidance engineers had already developed the same principle mathematically, decades before it was recognized in insects. Researchers at Caltech found that fruit flies make split-second flight corrections using sensory-motor circuits whose wiring effectively performs the necessary computations, with no need for explicit calculation. These “embodied cognition” approaches, in which intelligent behavior emerges from tightly coupling sensory inputs to motor outputs with minimal intervening computation, could transform robotics. The “RoboBee” project at Harvard University has already applied such principles to micro-robots, creating insect-sized flying machines that use simplified visual algorithms modeled on bee optic flow processing rather than traditional computer vision.
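Proportional navigation itself is simple enough to simulate directly. The Python sketch below is a generic 2-D version of the guidance law, not a model of dragonfly neurons: at each time step the pursuer rotates its heading by a gain `nav_gain` times the change in the line-of-sight angle to a target flying in a straight line. Speeds, starting positions, gain, capture radius, and the function name are illustrative assumptions.

```python
import math


def simulate_pn(nav_gain=3.0, pursuer_speed=20.0, target_pos=(100.0, 0.0),
                target_vel=(0.0, 10.0), dt=0.01, t_max=20.0, capture_radius=1.0):
    """2-D proportional navigation; returns (captured, time).

    The pursuer turns by nav_gain times the change in line-of-sight (LOS)
    angle. All parameter values are illustrative assumptions."""
    px, py = 0.0, 0.0
    tx, ty = target_pos
    heading = math.atan2(ty - py, tx - px)  # start by pointing at the target
    prev_los = heading
    t = 0.0
    while t < t_max:
        # Advance both agents one time step (simple Euler integration).
        px += pursuer_speed * math.cos(heading) * dt
        py += pursuer_speed * math.sin(heading) * dt
        tx += target_vel[0] * dt
        ty += target_vel[1] * dt
        t += dt
        if math.hypot(tx - px, ty - py) < capture_radius:
            return True, t
        los = math.atan2(ty - py, tx - px)
        # Wrap the change in LOS angle into [-pi, pi) before applying the law.
        d_los = (los - prev_los + math.pi) % (2 * math.pi) - math.pi
        heading += nav_gain * d_los
        prev_los = los
    return False, t
```

With the default settings (a pursuer twice as fast as its target and a gain of 3), `simulate_pn()` reports a capture well within the 20-second limit, whereas `simulate_pn(nav_gain=0.0)`, which simply flies straight toward the target’s starting position, never gets closer than about 45 units. In this form the guidance law uses only the rotation of the line of sight, not the target’s distance or speed, which is part of what makes it attractive for small brains and small processors.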

Mushroom Bodies: Natural Learning Centers

The mushroom bodies, distinctive paired structures in insect brains, are specialized learning and memory centers that AI designers are increasingly studying. Named for their mushroom-like appearance, they are crucial for associative learning, sensory integration, and memory formation in insects. Honeybees with damaged mushroom bodies struggle to learn which floral scents predict nectar rewards, while intact bees can learn these associations after just a few experiences. Researchers at the University of Sussex have modeled the circuitry of these structures, highlighting a remarkably efficient architecture in which incoming sensory information is transformed into sparse representations before being associated with reward signals. This architecture has inspired computational “mushroom body models” that excel at rapid learning from limited examples. Whereas conventional deep learning systems require thousands of training examples, these models can learn associations from just a handful of experiences, much as insects learn which flowers provide nectar after visiting just a few blooms.
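The expand, sparsify, and associate architecture described above can be sketched as a toy learner. This is an illustrative Python sketch, not the Sussex group’s model or any other published one: inputs are expanded onto many Kenyon-cell-like units (the mushroom body’s intrinsic neurons) through sparse random connections, a winners-take-all step keeps about 5% of them active, and a single output neuron strengthens its synapses from the active units whenever a reward signal arrives. Layer sizes, the learning rate, and the class and method names are all assumptions.

```python
import random


class MushroomBodyModel:
    """Toy expand-sparsify-associate learner (illustrative, not a published model)."""

    def __init__(self, n_inputs=50, n_kc=2000, fan_in=6, active_fraction=0.05,
                 learning_rate=1.0, seed=0):
        rng = random.Random(seed)
        # Sparse random input -> Kenyon-cell-like wiring (sizes are assumptions).
        self.connections = [rng.sample(range(n_inputs), fan_in) for _ in range(n_kc)]
        self.k = max(1, int(n_kc * active_fraction))
        # Synapses from each Kenyon-cell-like unit onto one output neuron.
        self.weights = [0.0] * n_kc
        self.learning_rate = learning_rate

    def encode(self, x):
        """Indices of the k most strongly driven units (k-winners-take-all)."""
        drive = [sum(x[i] for i in conn) for conn in self.connections]
        return sorted(range(len(drive)), key=drive.__getitem__, reverse=True)[: self.k]

    def respond(self, x):
        """Output neuron activity: summed weights from the currently active units."""
        return sum(self.weights[j] for j in self.encode(x))

    def learn(self, x, reward):
        """Reward-gated update: only synapses from active units change."""
        for j in self.encode(x):
            self.weights[j] += self.learning_rate * reward
```

After a single call to `learn(odor, reward=1.0)`, `respond(odor)` jumps from 0.0 to 100.0 (one unit of weight for each of the 100 active cells). A slightly noisy version of the same odor shares most of those active cells and still evokes a strong response, while an unrelated odor overlaps only by chance and evokes a weak one. Sparseness is what makes one large update safe: because different odors activate mostly different cells, learning one barely interferes with the others.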

Multimodal Integration Without Massive Processing

Insects seamlessly integrate multiple sensory inputs into coherent representations of their world, and they do so with a neural efficiency that AI designers envy. A foraging honeybee simultaneously processes visual, olfactory, tactile, and motion information to identify flowers, navigate landscapes, and return to its hive, all while monitoring wind conditions and potential threats. This multimodal integration relies on specialized hub neurons that receive inputs from different sensory processing regions, creating unified representations without massive computational resources. Researchers at the Max Planck Institute found that cockroaches integrate visual and tactile information through fewer than 200 specialized neurons, allowing them to navigate complex environments even with partial sensory information. Current multimodal AI systems, by contrast, require enormous neural networks and computational resources to achieve similar integration. These findings have inspired multimodal neural network architectures with convergent processing pathways modeled after insect brains, which aim to reduce computational requirements while maintaining performance on tasks that combine vision, audio, and other sensory inputs.
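One simple way to picture a convergent hub is reliability-weighted cue combination, sketched below in Python. This is a swapped-in textbook technique (inverse-variance weighting), not the mechanism reported for cockroaches: each sense supplies an estimate plus a variance expressing how noisy it is, the hub weights each estimate by its reliability, and any sense that is unavailable is simply left out. The function name and the (value, variance) input format are assumptions.

```python
def integrate_cues(estimates):
    """Fuse per-modality estimates, weighting each by its reliability.

    `estimates` maps modality name -> (value, variance), or None when that
    sense is unavailable. Inverse-variance weighting is an assumed,
    standard cue-combination rule, not a claimed insect circuit.
    """
    available = {m: e for m, e in estimates.items() if e is not None}
    if not available:
        raise ValueError("no sensory input available")
    # Reliability = 1 / variance; noisier senses get less say.
    weights = {m: 1.0 / var for m, (_, var) in available.items()}
    total = sum(weights.values())
    fused = sum(weights[m] * value for m, (value, _) in available.items()) / total
    return fused, 1.0 / total  # fused estimate and its variance
```

For example, if vision puts a wall 10.0 cm away with variance 4.0 and touch puts it at 12.0 cm with variance 1.0, the fused estimate is 11.6 cm with variance 0.8, more certain than either sense alone; if vision drops out, the hub falls back to the touch estimate unchanged.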

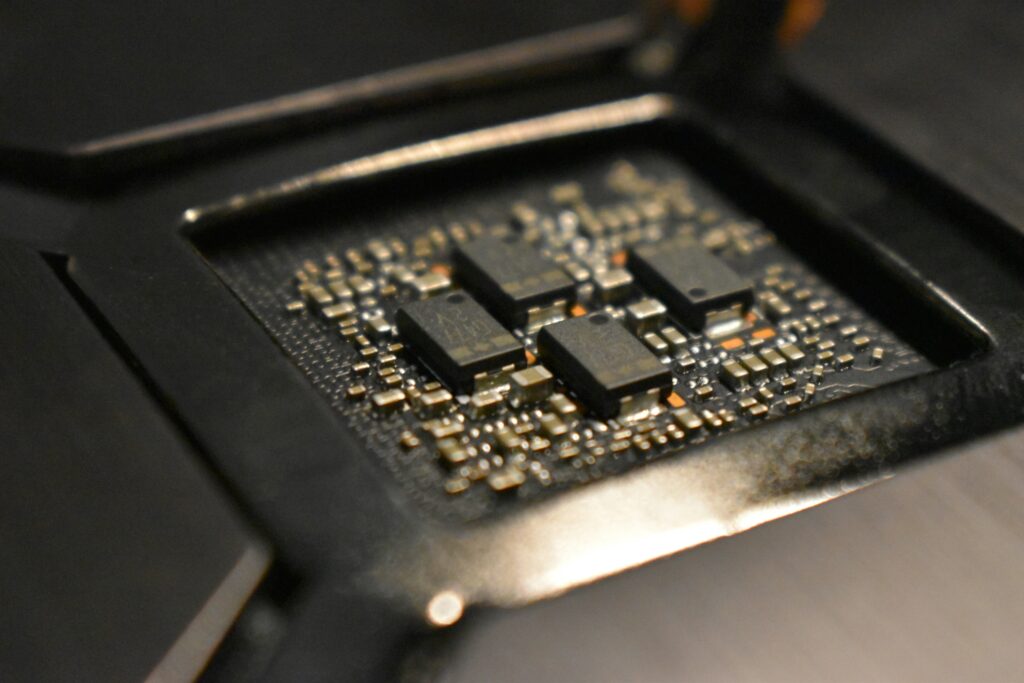

Neuromorphic Hardware: Building Bug-Inspired Chips

The energy efficiency and computational elegance of biological brains, insect brains included, have inspired a new generation of computer chips designed to process information more like biological neural networks. Traditional von Neumann computing architectures, with separate memory and processing units, create data-movement bottlenecks that limit efficiency and speed, particularly for neural network computations. Neuromorphic chips like Intel’s Loihi and IBM’s TrueNorth fundamentally reimagine computing by implementing neuron-like processing elements that communicate through spikes over synapse-like connections, drawing on principles from biological neural circuits in general rather than from any single species. These chips can run neural networks on milliwatts to a few watts of power, instead of the hundreds of watts drawn by GPU-based systems. The European “BrainScaleS” project has used its neuromorphic hardware to model insect mushroom bodies, creating systems that can learn from few examples with minimal energy. Perhaps most impressively, researchers at the University of Washington have created “smelling” robots that borrow odor-sensing and odor-tracking strategies from insects, with potential applications in detecting gas leaks, explosives, or survivors in disaster scenarios.
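Many neuromorphic chips, Loihi and TrueNorth among them, implement variants of the leaky integrate-and-fire (LIF) neuron, in which a membrane potential integrates input, leaks back toward rest, and emits a spike only when it crosses a threshold. A minimal discrete-time LIF neuron in Python looks like this; the time constant, threshold, arbitrary units, and function name are illustrative assumptions, not the parameters of any particular chip.

```python
def lif_neuron(input_current, dt=1.0, tau=20.0, v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron; return the steps at which it spikes.

    Units are arbitrary and the parameter values are illustrative assumptions."""
    v = v_rest
    spikes = []
    for step, i_in in enumerate(input_current):
        # Leak toward the resting potential while integrating the input.
        v += (dt / tau) * (v_rest - v) + dt * i_in
        if v >= v_threshold:
            spikes.append(step)  # emit a spike event...
            v = v_reset          # ...and reset the membrane potential
    return spikes
```

A constant input of 0.1 per step produces a regular spike train, `lif_neuron([0.1] * 100)` → `[13, 27, 41, 55, 69, 83, 97]`, while an input of 0.04 never reaches threshold and produces no spikes at all. On event-driven hardware, silence costs almost nothing, which is why sparse spiking activity translates so directly into energy savings.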

Predictive Coding and Attention Mechanisms

Insects employ sophisticated predictive coding and attention mechanisms to process only the most relevant information, principles that are increasingly valuable for efficient AI. Rather than processing all sensory information equally, insect brains filter inputs based on behavioral relevance and predictive value. Dragonfly visual systems contain specialized “target detection” neurons that respond selectively to prey-like objects while ignoring background visual clutter, implementing a form of attention that prioritizes processing resources. Researchers at the University of Adelaide discovered that honeybee brains predict expected sensory inputs and primarily process deviations from these predictions, dramatically reducing neural processing requirements. These principles have inspired computer vision systems that use insect-like predictive coding and attention mechanisms to process video streams with a fraction of the computational resources of conventional approaches. Autonomous drone systems using these biologically inspired attention algorithms can track moving objects more reliably while consuming less power, and machine learning models incorporating predictive coding principles show improved robustness to noise and distortion compared with conventional approaches.
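The core of predictive coding, passing on only what the internal model failed to predict, can be illustrated with a toy change detector in Python. This is a simplified sketch, not the honeybee or dragonfly circuitry: the “prediction” for each pixel is an exponential moving average of what that pixel has shown before, and only pixels whose new value deviates from the prediction by more than a threshold are reported for further processing. The smoothing factor, threshold, and function name are illustrative assumptions.

```python
def prediction_error_events(frames, threshold=0.1, smoothing=0.5):
    """Return, for each frame after the first, the indices of pixels that deviate
    from a running prediction by more than `threshold`.

    The prediction is an exponential moving average of past frames, a deliberately
    simple stand-in for an internal model (an assumption, not a brain model)."""
    prediction = list(frames[0])
    events = []
    for frame in frames[1:]:
        # Only prediction errors above threshold are passed downstream.
        surprising = [i for i, (p, x) in enumerate(zip(prediction, frame))
                      if abs(x - p) > threshold]
        events.append(surprising)
        # Nudge the internal model toward what was actually observed.
        prediction = [p + smoothing * (x - p) for p, x in zip(prediction, frame)]
    return events
```

In a 100-pixel scene with a static gray background and one bright spot moving one pixel per frame, each frame produces at most three “surprising” pixels (the spot’s new position plus a brief fading trail), so downstream processing touches about 3% of the image instead of all of it.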

Adaptive Learning with Limited Examples

Perhaps one of the most valuable lessons from insect cognition is the ability to learn effectively from very limited experience, a capability current AI systems largely lack. Honeybees can learn to associate a specific floral scent with a food reward after just a single exposure, a form of one-shot learning that stands in stark contrast to deep learning systems requiring thousands or millions of training examples. This rapid learning has been traced to neuromodulatory reward signals (carried mainly by octopamine in honeybees and by dopamine in fruit flies) that trigger strong synaptic changes after significant experiences. Moth brains show a remarkable ability to generalize from limited examples, recognizing flower variants they have never encountered based on partial feature matches with previously rewarding flowers. These capabilities have inspired new machine learning approaches, such as “insect-inspired few-shot learning” algorithms that can recognize new object categories from just a handful of examples. Google’s DeepMind has incorporated principles from insect learning into systems that can learn new tasks from significantly fewer examples than conventional approaches, a significant step toward more adaptable artificial intelligence.

Resilience Through Simplicity

Insect neural systems demonstrate remarkable resilience to damage and environmental change, a property increasingly valued in artificial systems. Honeybees maintain navigational abilities even after losing significant portions of their brain, and locusts can continue coordinated flight despite substantial neural damage. This resilience stems partly from distributed processing and redundancy, but also from the inherent simplicity of many insect neural circuits. Researchers at the University of Edinburgh found that insect brains often implement robust, fault-tolerant algorithms that prioritize reliability over precision, allowing them to keep functioning under suboptimal conditions. This contrasts sharply with many AI systems, which can fail catastrophically after even minor perturbations to their inputs or architecture. Drawing inspiration from these principles, researchers have developed more robust neural network architectures that maintain performance despite simulated neuron “deaths” or noise in the system. Self-driving vehicle systems incorporating insect-inspired resilience principles have demonstrated, in testing, the ability to keep operating safely when key sensors fail, a crucial advance for real-world deployment, where perfect reliability cannot be guaranteed.
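One reason distributed circuits degrade gracefully is that information is spread across many overlapping units. The Python sketch below illustrates the idea with a population code: a ring of cosine-tuned units jointly encodes a heading, and the heading is read out as a weighted vector sum, so losing a random quarter of the units shifts the estimate rather than erasing it. The ring size, tuning curves, and function names are illustrative assumptions, not a model of any specific insect circuit.

```python
import math
import random


def population_decode(heading, n_neurons=64, dead=frozenset()):
    """Decode a heading from a ring of cosine-tuned units, skipping 'dead' ones.

    Ring size and tuning are illustrative assumptions; the estimate is a
    vector sum over many overlapping units rather than a single unit's output."""
    x = y = 0.0
    for i in range(n_neurons):
        if i in dead:
            continue  # a lesioned unit simply contributes nothing
        preferred = 2 * math.pi * i / n_neurons
        rate = max(0.0, math.cos(heading - preferred))  # rectified cosine tuning
        x += rate * math.cos(preferred)
        y += rate * math.sin(preferred)
    return math.atan2(y, x)


def angular_error_deg(a, b):
    """Smallest absolute difference between two angles, in degrees."""
    return abs(math.degrees((a - b + math.pi) % (2 * math.pi) - math.pi))
```

With all 64 units intact, `population_decode(0.0)` recovers the true heading; after 16 randomly chosen units are removed, for example with `dead=frozenset(random.Random(3).sample(range(64), 16))`, the estimate shifts but still points in roughly the right direction. A design that stored the heading in a single unit would, by contrast, fail outright if that one unit died.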

The Future of Bug-Inspired AI

The convergence of entomology and artificial intelligence represents a growing frontier with tremendous potential for future innovation. As our understanding of insect neurobiology deepens through advanced imaging and recording techniques, we gain more precise insights into the elegant solutions evolution has discovered. Companies like Opteran Technologies are already commercializing insect-inspired navigation and obstacle avoidance systems for drones and robots that operate on a fraction of the power required by conventional approaches. Beyond efficiency gains, insect-inspired approaches may offer entirely new capabilities for artificial systems, such as the remarkable adaptability and generalization abilities seen in natural systems. Some researchers envision hybrid systems that combine the efficiency and specialization of insect-inspired algorithms with the raw processing power of conventional AI, creating systems that can handle both specialized tasks and general problems. As climate change and energy concerns grow more pressing, the incredible efficiency of insect cognition may provide not just interesting research directions, but essential solutions for developing sustainable, powerful AI that can operate within our planet’s resource constraints.

The study of insect brains reveals nature’s solutions to computing challenges that have evolved over hundreds of millions of years. While modern AI systems have achieved remarkable capabilities through sheer computational power, they still fall short of the efficiency, adaptability, and resilience displayed by even the humblest insect brain. By reverse-engineering the principles that allow these tiny neural systems to achieve so much with so little, researchers are developing new approaches to artificial intelligence that could break through current limitations. As this field continues to evolve, the gap between biological and artificial intelligence may narrow, not because machines become more like human brains, but because they incorporate the elegant efficiency of the insects that have thrived on Earth far longer than we have. In our quest to build better machines, it seems the smallest brains may offer some of the biggest insights.